Hello everyone, it's been a long time since I last wrote an article here. Not because I haven't been doing tech stuff, but because most of my time goes to my job, and my side projects and other activities have been mostly abandoned for a long time. My apologies.

However, I can sometimes write guides on problems I solve at work, for you and also for my future self, and this is one of them. Currently we have many web applications on AWS, managed by OpsWorks on a Chef 11 stack, everything configured and running. Everything OK.

At the time of writing, AWS already supports Chef 12 and some of the Chef 11 cookbooks are being deprecated, so I need to move our applications to a Chef 12 stack as soon as possible. This article covers the problems I found during this process and how I solved them.

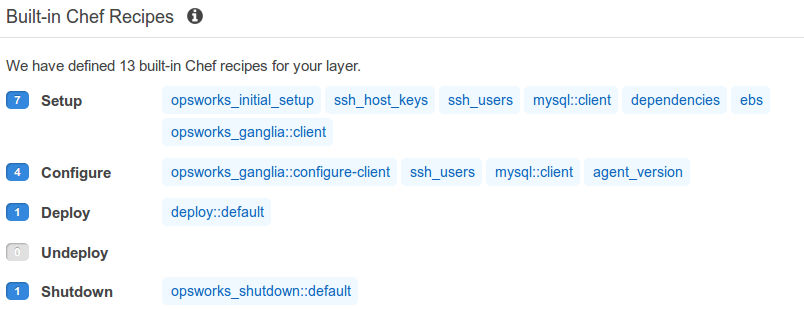

Setting up the Chef 12 stack and the application is pretty straightforward: it's done the same way as on the old Chef 11 stack. The problems come when setting up the recipes, basically because Chef 12 stacks no longer include any built-in recipes.

This means we have to provide every recipe ourselves.

There is a nice guide to the basics of Chef on this DigitalOcean documentation page, so we start by creating a new cookbook.

Once the cookbook is created, it should be managed with Berkshelf; we can follow this article to set it up.

Then we can start adding the dependencies we need to the Berksfile; cookbooks can be browsed on supermarket.chef.io.
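As an illustration, a Berksfile for the cookbooks used by the recipes later in this post might look like the sketch below. It is not my exact file: the layout is an assumption, and only the cookbook names come from the recipes. Running berks install on it resolves the dependencies and writes a Berksfile.lock. Since the recipes reach these cookbooks through include_recipe, Chef's documentation also recommends declaring each of them with depends in metadata.rb.

```ruby
# Berksfile (sketch; cookbook names taken from the recipes in this post)
source 'https://supermarket.chef.io'

# include this cookbook itself, as described by its metadata.rb
metadata

cookbook 'apt'
cookbook 'locale'
cookbook 'php'
cookbook 'nginx'
cookbook 's3_file'
```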

Once the Berksfile has been populated, run berks install to install every dependency, then commit the changes and push the git repository (as this cookbook is new, the remote must be set first).

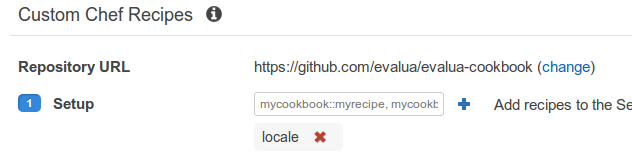

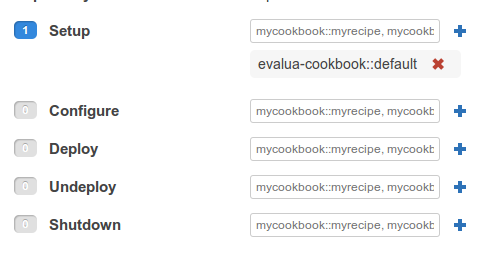

Next, back in the OpsWorks administration panel, the stack's custom cookbook URL must be set. From then on we can start adding recipes to the different stages and checking what works and what does not, for example the locale recipe.

To see what's going on we must check the Chef logs; Amazon provides a nice document on this.
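To avoid hunting through directories on the instance, a tiny Ruby helper like this one can print the newest Chef log and its error lines. It assumes the Chef 12 Linux layout in which each run writes /var/chef/runs/<run-id>/chef.log; treat that path as an assumption and check it against Amazon's document and your own instance.

```ruby
# Hypothetical helper for reading Chef 12 logs on an OpsWorks instance.
# Assumes each Chef run writes <runs_dir>/<run-id>/chef.log (verify this path).
CHEF_RUNS_DIR = '/var/chef/runs'

# Newest chef.log by modification time, or nil if there is none.
def latest_chef_log(runs_dir = CHEF_RUNS_DIR)
  Dir.glob(File.join(runs_dir, '*', 'chef.log')).max_by { |path| File.mtime(path) }
end

# Lines containing the ERROR or FATAL level tags.
def error_lines(log_path)
  File.foreach(log_path).grep(/\b(ERROR|FATAL)\b/).map(&:chomp)
end

if (log = latest_chef_log)
  puts "Latest run: #{log}"
  puts error_lines(log)
else
  puts "No chef.log found under #{CHEF_RUNS_DIR}"
end
```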

In the log we can see that the setup process failed because the locale cookbook could not be found.

Why is that? Because as stated here:

> In Chef 12 Linux, Berkshelf is no longer installed on stack instances. Instead, we recommend that you use Berkshelf on a local development machine to package your cookbook dependencies locally. Then upload your package, with the dependencies included, to Amazon Simple Storage Service. Finally, modify your Chef 12 Linux stack to use the uploaded package as a cookbook source. For more information, see Packaging Cookbook Dependencies Locally.

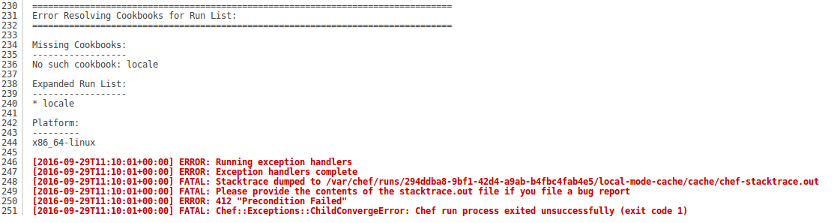

When using Berkshelf, the git repo can't be the cookbook source anymore. Instead, we have to go into the cookbook repository on our development machine, package it with berks package cookbooks.tar.gz, upload the result to Amazon S3, and then point the stack's cookbook source to that S3 file.
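Since this package-and-upload step is repeated after every cookbook change, it can be scripted. A minimal Ruby sketch, assuming the AWS CLI is installed and configured; the bucket, key and archive names are placeholders, and the helper names are hypothetical:

```ruby
require 'fileutils'
require 'shellwords'

DEFAULT_ARCHIVE = 'cookbooks.tar.gz'

# Hypothetical helper: the commands that package the cookbook (with its
# dependencies) via Berkshelf and upload the archive to S3 via the AWS CLI.
def release_commands(bucket:, archive: DEFAULT_ARCHIVE, key: archive)
  [
    ['berks', 'package', archive],
    ['aws', 's3', 'cp', archive, "s3://#{bucket}/#{key}"]
  ]
end

# Prints each command and runs it unless dry_run is set, stopping at the first failure.
def release(bucket:, archive: DEFAULT_ARCHIVE, key: archive, dry_run: false)
  FileUtils.rm_f(archive) unless dry_run # remove an archive left over from a previous run
  release_commands(bucket: bucket, archive: archive, key: key).each do |cmd|
    puts cmd.shelljoin
    next if dry_run
    system(*cmd) or abort("command failed: #{cmd.shelljoin}")
  end
end
```

Saved as release.rb in the cookbook directory, it could be run with ruby -r ./release.rb -e 'release(bucket: "your-bucket")', or with dry_run: true first to just print the commands.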

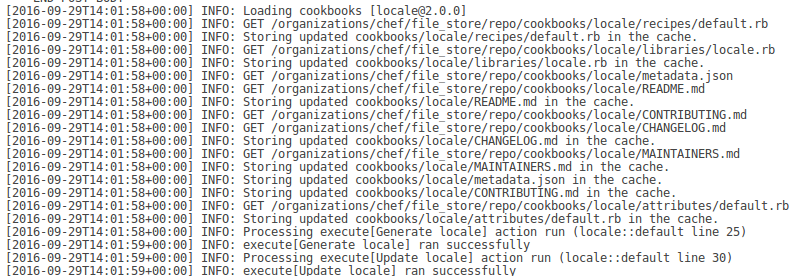

If we check the logs now, the locale cookbook is found and runs successfully, so the machine is up and running.

Now that we have a way to load and run cookbooks through Berkshelf, we just need to add all the ones we require.

During this process I realized that my lack of Chef knowledge wouldn't let me achieve my goal, so I went back to basics and followed this guide on managing a web application with Chef, where I learned how to use Kitchen, write custom recipes, and test and deploy them. Using Chef feels more like developing and deploying software than clicking around an administration panel website, and I like that!

At this point I started adding, package by package, everything required to run our Laravel applications, testing as I went: first apt, then MySQL, then Apache, and so on. The workflow is: get the cookbook from the Chef Supermarket, read its documentation, run the desired recipes and customizations (all triggered from a default recipe), test the whole thing with Kitchen, and finally package and upload it to S3 to test it on the EC2 machine. The default recipe is then the only thing invoked in the Setup stage of the OpsWorks stack.
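For the Kitchen step, a few InSpec checks like the ones below could confirm that the setup recipe left the machine in the expected state. This sketch assumes Test Kitchen is configured with the InSpec verifier on an Ubuntu 16.04 platform (where the php7.0 packages installed later in setup.rb exist); the checks are hypothetical, so adapt them to your own cookbook and test file layout.

```ruby
# Hypothetical InSpec checks for the setup recipe, run by `kitchen verify`.
# Package and service names assume Ubuntu 16.04 with PHP 7.0.
describe package('nginx') do
  it { should be_installed }
end

describe service('nginx') do
  it { should be_running }
end

describe package('php7.0-fpm') do
  it { should be_installed }
end

describe service('php7.0-fpm') do
  it { should be_running }
end
```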

As I couldn't wait any longer, I tried to deploy my application. The deploy was successful but, as I expected, nothing happened: the application was neither running nor even installed. There is still work to do because, as you can see in the previous picture, there is no deploy recipe, so AWS does not know what to do when deploying an application; you have to write it yourself.

So I started writing my custom deploy recipe step by step: deploying it, checking the logs, and incrementally adding more functionality.

I found this nice and useful trick on Server Fault to get an interactive Ruby shell on the OpsWorks machine with the OpsWorks data bags already loaded. Also, don't forget to run the update_custom_cookbooks command so the cookbook changes are actually applied after uploading a new version to S3.
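The update_custom_cookbooks command can be run from the console (Run Command on the stack page) or from a script through the AWS CLI's opsworks create-deployment call. A hedged Ruby sketch of the latter, which only builds and prints the CLI call; the helper name and the stack and instance IDs are placeholders, and actually running it requires configured AWS CLI credentials:

```ruby
require 'json'
require 'shellwords'

# Hypothetical helper: the AWS CLI call that runs the OpsWorks
# "update_custom_cookbooks" stack command, optionally on specific instances.
def update_cookbooks_command(stack_id, instance_ids: [])
  cmd = ['aws', 'opsworks', 'create-deployment',
         '--stack-id', stack_id,
         '--command', JSON.generate('Name' => 'update_custom_cookbooks')]
  cmd += ['--instance-ids', *instance_ids] unless instance_ids.empty?
  cmd
end

cmd = update_cookbooks_command('YOUR-STACK-ID')
puts cmd.shelljoin
# system(*cmd) would actually trigger the command
```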

In the end I came up with these recipes, though yours may differ depending on your application and server structure.

setup.rb

```ruby
include_recipe 'apt::default'
include_recipe 'locale'
include_recipe 'custom-cookbook::database'
include_recipe 'php'
include_recipe 'nginx'

# PHP 7.0 packages, installed using apt
package "php7.0-mysql" do
  action :install
end

package "php7.0-fpm" do
  action :install
end
```

deploy.rb

```ruby
include_recipe 's3_file'

tag = 'latest' # not used below

# the app flagged for deployment (first match only), from the OpsWorks data bag
app = search("aws_opsworks_app", "deploy:true").first
app_dir = "/srv/www/#{app[:shortname]}"
tmp_dir = "/tmp/opsworks/#{app[:shortname]}"

# download the app artifact from S3
artifact = "/tmp/#{app[:shortname]}.zip"

s3_file artifact do
  remote_path "#{app[:name]}.zip"
  bucket "opsworks"
  s3_url "https://s3-eu-west-1.amazonaws.com/opsworks"
  aws_access_key_id app[:app_source][:user]
  aws_secret_access_key app[:app_source][:password]
end

directory tmp_dir do
  mode "0755"
  recursive true
end

execute "unzip #{artifact} -d #{tmp_dir}"

# turn the unzipped files into a throwaway git repository,
# because the deploy resource checks code out from a repository
execute 'create git repository' do
  cwd tmp_dir
  command "find . -type d -name .git -exec rm -rf {} \\;; find . -type f -name .gitignore -exec rm -f {} \\;; git init; git add .; git config user.name 'AWS OpsWorks'; git config user.email 'root@localhost'; git commit -m 'Create temporary repository from downloaded contents.'"
end

deploy app_dir do
  repository tmp_dir
  user "root"
  group "root"
  environment app[:environment]
  symlink_before_migrate({})
end

# the deployed release does not need the temporary git metadata
directory "#{app_dir}/current/.git" do
  recursive true
  action :delete
end

nginx_site app[:shortname] do
  template "nginx-template.conf.erb"
  enable true
  variables({
    :domains => app[:domains].first,
    :root => "#{app_dir}/current/#{app[:attributes][:document_root]}"
  })
end

service "nginx" do
  action :reload
end

# clean up
file artifact do
  action :delete
  backup false
end

directory tmp_dir do
  recursive true
  action :delete
end
```
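deploy.rb depends on two things not shown here: the shape of the aws_opsworks_app data bag items and the nginx-template.conf.erb template. The plain-Ruby sketch below runs outside Chef and mimics both with made-up data. It filters apps the way the deploy:true search does, builds the variables passed to nginx_site, and renders a hypothetical template, exposing the variables as instance variables as Chef templates do. The template contents, the PHP-FPM socket path and the domain names are all assumptions.

```ruby
require 'erb'

# Hypothetical stand-in for aws_opsworks_app data bag items; values are made up.
APPS = [
  { shortname: 'blog', name: 'Blog', deploy: false,
    domains: ['blog.example.com'], attributes: { document_root: 'public' } },
  { shortname: 'shop', name: 'Shop', deploy: true,
    domains: ['shop.example.com', 'www.shop.example.com'],
    attributes: { document_root: 'public' } }
].freeze

# Same filter as the search query: only apps flagged for this deployment.
def apps_to_deploy(items)
  items.select { |app| app[:deploy] }
end

# The variables deploy.rb passes to nginx_site for one app.
def site_variables(app)
  app_dir = "/srv/www/#{app[:shortname]}"
  { domains: app[:domains].first,
    root: "#{app_dir}/current/#{app[:attributes][:document_root]}" }
end

# Hypothetical nginx-template.conf.erb for a Laravel app behind PHP 7.0 FPM.
# Socket path and snippets/fastcgi-php.conf assume Ubuntu 16.04 packages.
NGINX_TEMPLATE = <<~'TEMPLATE'
  server {
      listen 80;
      server_name <%= @domains %>;
      root <%= @root %>;
      index index.php index.html;

      location / {
          try_files $uri $uri/ /index.php?$query_string;
      }

      location ~ \.php$ {
          include snippets/fastcgi-php.conf;
          fastcgi_pass unix:/run/php/php7.0-fpm.sock;
      }
  }
TEMPLATE

# Chef exposes template variables as instance variables; mimic that here.
class TemplateContext
  def initialize(vars)
    vars.each { |key, value| instance_variable_set("@#{key}", value) }
  end

  def render(source)
    ERB.new(source).result(binding)
  end
end

apps_to_deploy(APPS).each do |app|
  puts TemplateContext.new(site_variables(app)).render(NGINX_TEMPLATE)
end
```

Note that search(...).first in deploy.rb only handles the first app flagged for deployment, and app[:domains].first only puts the first domain into server_name. Looping over every flagged app, as the sketch does, or passing app[:domains].join(' ') would be small changes if you need them.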

This allowed us to deploy some of our web applications with Chef 12 and, since I had updated them beforehand, to take advantage of PHP 7.

I wish I could take this further because, as I mentioned earlier, Chef deployments could be made much better with testing and continuous integration, and there is a lot that can be tweaked. Unfortunately I have to leave it as it is for now and focus on other tasks my job demands. Anyway, it was interesting to learn all this.

See you next time!