Over the last few weeks I learned a lot about HTTPS certificates. The most important lesson: don't underestimate them. Misconfiguration and other problems can prevent Nginx from starting, block your users from accessing your site, and bring a lot of stress to your team and yourself.

We were not paying much attention to how our certificates worked, or how they were generated, installed or configured, because someone else had done it for us and the whole thing seemed to be working. That lasted until last month, when our certificates started to expire and the person in charge of them was no longer working here, so I had to get into the mud myself and renew and update them.

The first solution

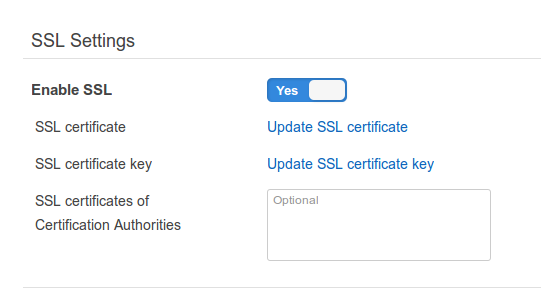

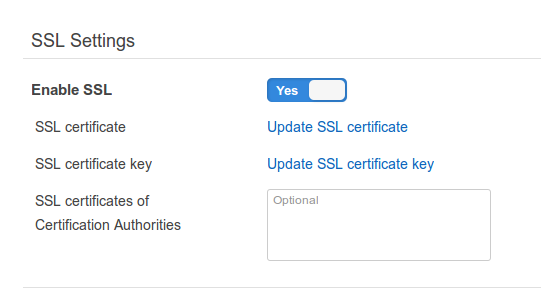

After fighting a bit I found a way. I had dealt with our custom Chef 12 recipes not long before, so I was used to writing them, and I ended up orchestrating a manual process: copy the content of the full chain certificate and the key into the SSL configuration fields on the OpsWorks application management page.

Chef would then read that configuration. Depending on what was set in those fields, it would pick a custom SSL Nginx template written for the site and then create the SSL certificate and key on the filesystem.

It worked fairly well and our most urgent need was covered. We could start deploying new releases and creating or destroying load-based, time-based and standard instances again; the application would not crash and all user connections would go through HTTPS.

Of course, this setup had a downside. We have many applications using several certificates, and each of them still had to be renewed by hand. The expiration incident had affected a lot of users and caused a lot of stress around here, and we did not want to repeat it.

Even worse, exactly one week later we discovered that our CA had been distrusted by Mozilla and Google about three months earlier, and we had not known.

We found out that day because many of our users received automatic browser updates that applied the distrust measures, and they could no longer access the application. We were in trouble again.

The current solution

We were in a difficult situation, so it was the perfect time to solve the problem and move forward at the same time. In the aftermath of these incidents we decided to go for the brand new SSL solution, Let's Encrypt.

Unless you've been living under a rock, if you belong to the world of web application development you have probably heard about it. The project aims to offer a free CA together with a set of tools for automatically managing certificate generation and renewal. It sounded awesome and very promising: if we could make it work, we would no longer suffer the certificate management process on our old, distrusted CA's website, which was a pain and involved a lot of manual steps that I hated.

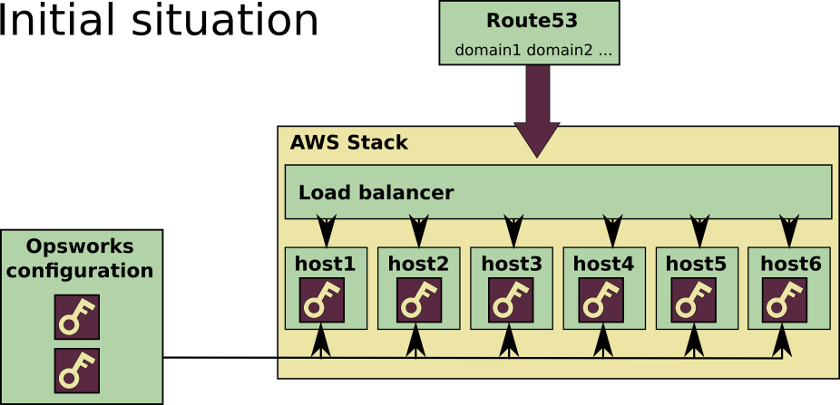

The challenge came right after reading the official documentation and setting up HTTPS on some individual machines for testing purposes. That part is very easy, but integrating it into a Chef recipe and firing it during the AWS OpsWorks stack lifecycle is not. There are already tools that make this process easier, like the acme-chef cookbook I tried to use, but I still had lots of unanswered questions: "How do I share the key with every instance?", "Which instance would perform the key renewal when necessary?", "How will those keys propagate among the instances?" Also, to generate a key the instance must serve files under a directory called /.well-known/, and those files must be reachable through the domain you intend to validate. As there is a load balancer in between, you don't know which machine you are going to end up on when pointing at the domain. So I started looking for answers to these questions.

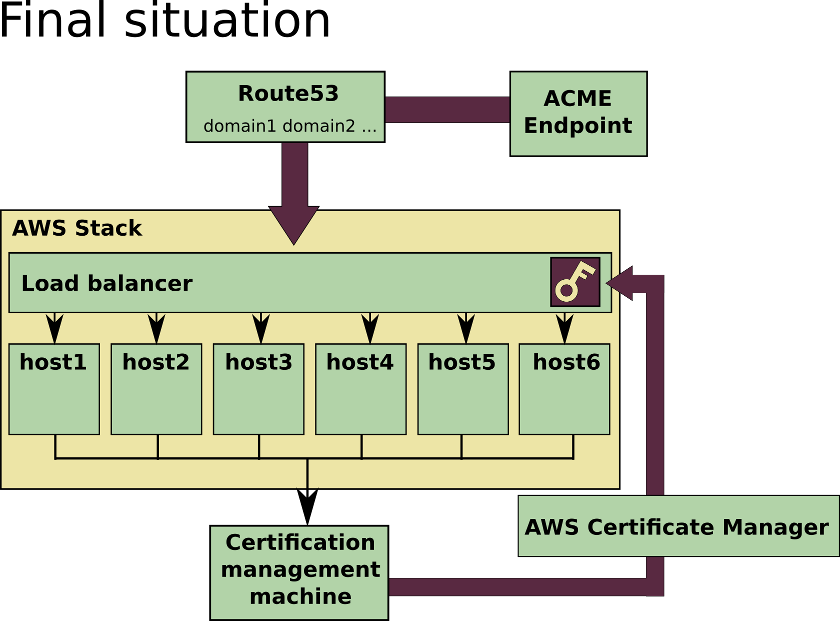

I was lucky enough to find a post by Fabian Arrotin called Generating multiple certificates with Letsencrypt from a single instance. This post was extremely helpful, not just because it explains a way to overcome most of the problems we had by using an architecture that made much more sense to me, but also because it details the implementation for Nginx. I created an empty Ubuntu machine directly on EC2 with the permissions and tools needed to run letsencrypt, gave it an HTTP address on Route 53 and modified our usual Nginx template to include the snippet from Fabian's post.

    location /.well-known/ {
        proxy_pass http://certification.domain.com/.well-known/;
    }

This snippet makes every host in the stack forward the /.well-known ACME calls to the EC2 machine, so the certificates for that domain are correctly generated there, no matter which machine the request ends up on after going through the balancer. This allowed me to generate certificates for the domains of every application, stored on that EC2 certification machine, by executing this command:

    letsencrypt certonly --webroot --webroot-path /var/www/html --manual-public-ip-logging-ok --agree-tos --email you@domain.com -d sub2.domain.com -d sub3.domain.com
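Before requesting real certificates it can be worth checking that a domain really forwards /.well-known/ to the certification machine. The following is only a minimal sketch of such a check, meant to run on the certification machine itself. It assumes the webroot is /var/www/html as in the command above and that the webroot plugin serves challenges from .well-known/acme-challenge/; the function names and the probe file name are made up for illustration.

```python
import os
import urllib.request
import uuid


def challenge_url(domain, token):
    """URL under which a challenge file must be reachable for a domain."""
    return "http://{}/.well-known/acme-challenge/{}".format(domain, token)


def probe_domain(domain, webroot="/var/www/html"):
    """Write a throwaway file into the webroot and fetch it through the domain.

    Returns True when the request, after going through the load balancer and
    the Nginx proxy_pass rule, comes back with the content written here.
    """
    token = "probe-" + uuid.uuid4().hex  # hypothetical probe file name
    challenge_dir = os.path.join(webroot, ".well-known", "acme-challenge")
    os.makedirs(challenge_dir, exist_ok=True)
    path = os.path.join(challenge_dir, token)
    with open(path, "w") as handle:
        handle.write(token)
    try:
        with urllib.request.urlopen(challenge_url(domain, token), timeout=10) as response:
            return response.read().strip() == token.encode("ascii")
    except OSError:
        # URLError and HTTPError are subclasses of OSError
        return False
    finally:
        os.remove(path)
```

Calling `probe_domain("sub2.domain.com")` for each domain before running letsencrypt gives a quick yes or no on whether the proxy rule is deployed on the instances behind that domain.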

This was looking good, but I still needed a way to automatically distribute those keys to all the instances in the stack and, more importantly, that distribution had to fire whenever the certificates were created or updated. At that point, thanks to my colleague carlosafonso, I learned that storing the key on every machine was a very silly decision of mine. With TLS termination there is no need to set up HTTPS and keys everywhere, and doing so is not significantly more secure than setting up HTTPS only on the load balancer, so the distribution process would be a bit easier.

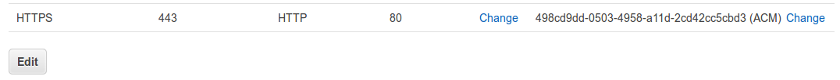

For the distribution process I quickly coded a couple of Python scripts. They use boto to "speak" with AWS: if the certificate requested by letsencrypt has changed, or if there is a new certificate, they send it to AWS Certificate Manager and set it on the desired load balancer. The scripts of course assume some things about the stack design, but as we control that and are happy with our current design, that's OK.

For storage, the script uses a JSON file that records the certificate ARN, the domain and the load balancer name for each entry. The JSON file has the following structure:

    {"servers": [
        {
            "certificateArn": "arn:aws:acm:someCertificateArn",
            "domain": "domain1.com",
            "balancerName": "loadBalancer1"
        }
    ]}
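Because both scripts depend on this file having exactly this shape, a small sanity check can save some debugging. This is just a sketch: the `validate_configuration` helper and its messages are hypothetical and not part of the scripts in this post. It assumes an empty certificateArn is allowed (a certificate that has not been uploaded yet) while domain and balancerName must be non-empty.

```python
import json

REQUIRED_KEYS = ("certificateArn", "domain", "balancerName")


def validate_configuration(data):
    """Return a list of problems found in the parsed JSON; empty means OK."""
    if not isinstance(data, dict) or not isinstance(data.get("servers"), list):
        return ["top level must be an object with a 'servers' list"]
    problems = []
    for index, server in enumerate(data["servers"]):
        if not isinstance(server, dict):
            problems.append("servers[{}] must be an object".format(index))
            continue
        for key in REQUIRED_KEYS:
            if not isinstance(server.get(key), str):
                problems.append("servers[{}] needs a string '{}'".format(index, key))
        # An empty certificateArn means "not uploaded yet"; the others must be set
        for key in ("domain", "balancerName"):
            if server.get(key) == "":
                problems.append("servers[{}] has an empty '{}'".format(index, key))
    return problems


example = json.loads("""
{"servers": [
    {"certificateArn": "arn:aws:acm:someCertificateArn",
     "domain": "domain1.com",
     "balancerName": "loadBalancer1"}
]}
""")
print(validate_configuration(example))
```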

And then the script would be like this:

    import json

    import boto3

    with open('certificates-configuration.json', 'r') as configurationFile:
        configurationFileData = json.load(configurationFile)

    configurationFileUpdated = False

    # Create the AWS clients once instead of once per server
    acmClient = boto3.client('acm')
    elbClient = boto3.client('elb')

    def readLiveFile(domain, name):
        # Read one of the PEM files letsencrypt keeps for the domain
        with open('/etc/letsencrypt/live/' + domain + '/' + name, 'rb') as pemFile:
            return pemFile.read()

    for server in configurationFileData['servers']:
        # Open the current certificate on the system
        certificate = readLiveFile(server['domain'], 'cert.pem')
        privatekey = readLiveFile(server['domain'], 'privkey.pem')
        chain = readLiveFile(server['domain'], 'chain.pem')

        # Check the current certificate on AWS
        currentCertificateArn = server['certificateArn']
        currentCertificate = ""
        if currentCertificateArn != "":
            response = acmClient.get_certificate(CertificateArn=currentCertificateArn)
            currentCertificate = response['Certificate']

        # Compare the certificates and if they don't match upload the new one.
        # ACM returns text while the file was read as bytes, so decode first
        # and ignore surrounding whitespace.
        if currentCertificate.strip() != certificate.decode('ascii').strip():
            print('Uploading the certificate')
            response = acmClient.import_certificate(
                Certificate=certificate,
                PrivateKey=privatekey,
                CertificateChain=chain
            )
            certificateArn = response['CertificateArn']

            print('Setting the certificate for the load balancer')
            elbClient.set_load_balancer_listener_ssl_certificate(
                LoadBalancerName=server['balancerName'],
                LoadBalancerPort=443,
                SSLCertificateId=certificateArn
            )

            server['certificateArn'] = certificateArn
            configurationFileUpdated = True

    if configurationFileUpdated:
        with open('certificates-configuration.json', 'w') as configurationFile:
            json.dump(configurationFileData, configurationFile)

That script is in charge of keeping the certificate uploaded to the load balancer synchronized, by comparing it against the letsencrypt live certificates on this certification machine.
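One possible refinement, which is not what the script above does: as far as I understand the ACM documentation, `import_certificate` also accepts the `CertificateArn` of a previously imported certificate, in which case the certificate is reimported in place and keeps its ARN and associations (subject to ACM's reimport conditions). The helper names below are hypothetical and this is only a sketch of that idea.

```python
def build_import_arguments(certificate, private_key, chain, existing_arn=""):
    """Build the keyword arguments for acm.import_certificate.

    CertificateArn is only included when a certificate was uploaded before,
    which asks ACM to reimport in place instead of creating a new one.
    """
    arguments = {
        "Certificate": certificate,
        "PrivateKey": private_key,
        "CertificateChain": chain,
    }
    if existing_arn:
        arguments["CertificateArn"] = existing_arn
    return arguments


def import_or_reimport(certificate, private_key, chain, existing_arn=""):
    """Upload the certificate and return the ARN reported by ACM."""
    import boto3  # imported here so the helper above has no AWS dependency

    acm = boto3.client("acm")
    response = acm.import_certificate(
        **build_import_arguments(certificate, private_key, chain, existing_arn)
    )
    return response["CertificateArn"]
```

With this variant the first upload still needs the call that sets the certificate on the load balancer listener, but later renewals would not leave a new certificate in ACM every time.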

I also wrote this small piece of Python code for adding records to the JSON file:

    import json
    import sys

    if len(sys.argv) != 3:
        print("Usage: python add_new_config.py <domain-name> <balancer-name>")
        sys.exit(1)

    # Must be the same file that the upload script reads
    with open('certificates-configuration.json', 'r') as configurationFile:
        configurationFileData = json.load(configurationFile)

    newConfig = {'certificateArn': '', 'domain': sys.argv[1], 'balancerName': sys.argv[2]}
    configurationFileData['servers'].append(newConfig)

    with open('certificates-configuration.json', 'w') as configurationFile:
        json.dump(configurationFileData, configurationFile)

Finally, I created two bash scripts. The first one adds and deploys a new certificate without requiring you to know much about how things work internally:

    #!/bin/bash

    if [[ $EUID -ne 0 ]]; then
        echo "This script should be run using sudo or as the root user"
        exit 1
    fi

    # Build the command as an array so no eval is needed
    newCertificateCommand=(letsencrypt certonly --webroot --webroot-path /var/www/html --manual-public-ip-logging-ok --agree-tos --email your.email@domain.com)

    echo -n "Type the list of domains for the certificate separated by whitespaces: "
    read -r -a domainArray

    echo -n "Type the name of the balancer where the certificate will be installed: "
    read -r balancer

    for domain in "${domainArray[@]}"
    do
        newCertificateCommand+=(-d "$domain")
    done

    # Stop here if the certificate could not be issued
    "${newCertificateCommand[@]}" || exit 1

    # letsencrypt stores the certificate under the first domain's name
    python add_new_config.py "${domainArray[0]}" "$balancer"

    ./auto-renew-certificates

It is meant to be simply executed; the script then asks you for what it needs.

The next one is used by the script above and is also meant to be executed periodically as a cron job:

    #!/bin/bash

    if [[ $EUID -ne 0 ]]; then
        echo "This script should be run using sudo or as the root user"
        exit 1
    fi

    letsencrypt renew

    python upload_certificate_if_needed.py

It makes letsencrypt renew the certificates if needed and then runs the Python script to synchronize the load balancers.
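Since an unnoticed expiration is what started all of this, a cheap safeguard is to check from time to time how many days the certificate served by a domain has left, in case the cron job silently stops working. This is only a sketch using the Python standard library; the function names are made up and nothing like this is part of the scripts above.

```python
import socket
import ssl
import time


def days_until_expiry(not_after, now=None):
    """Days left before a certificate expires.

    not_after is the 'notAfter' string of a certificate, in the format
    returned by getpeercert(), for example 'Jan  5 09:34:43 2018 GMT'.
    """
    if now is None:
        now = time.time()
    return (ssl.cert_time_to_seconds(not_after) - now) / 86400.0


def fetch_not_after(hostname, port=443):
    """Read the expiration date of the certificate a host is serving.

    An already expired certificate makes the handshake fail with an
    ssl.SSLError, which is itself a clear signal that something is wrong.
    """
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]
```

For example, `days_until_expiry(fetch_not_after("sub2.domain.com"))` could be run daily and raise an alert when the result drops below some threshold you are comfortable with.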

This is the schema of how the whole thing ended up:

Finishing touches

Now that everything was ready, I still had to deal with three problems. The first was health checking on the load balancers. I created a default site on Nginx to handle this separately from our applications. The default Nginx file is very simple:

    server {
        location /elb-status {
            access_log off;
            return 200;
        }
    }

Then I pointed every load balancer's health check at that elb-status path.
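Pointing the health check can also be scripted with boto3 instead of doing it from the console. The sketch below assumes a classic load balancer whose instances listen for plain HTTP on port 80; the interval, timeout and threshold values are arbitrary examples and the function names are hypothetical.

```python
def health_check_settings(path="/elb-status", port=80):
    """HealthCheck structure expected by the classic ELB API."""
    return {
        "Target": "HTTP:{}{}".format(port, path),
        "Interval": 30,           # seconds between checks (example value)
        "Timeout": 5,             # must be lower than Interval (example value)
        "UnhealthyThreshold": 2,  # failed checks before marking unhealthy
        "HealthyThreshold": 2,    # passed checks before marking healthy
    }


def point_health_check_at_status_path(balancer_name):
    """Apply the settings above to one load balancer."""
    import boto3  # imported here so the settings helper has no AWS dependency

    elb = boto3.client("elb")
    elb.configure_health_check(
        LoadBalancerName=balancer_name,
        HealthCheck=health_check_settings(),
    )
```

Looping over the `balancerName` values in the JSON configuration file would apply the same check to every balancer.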

The second one was HTTPS redirection. At that point, a user typing the address with http instead of https was not automatically redirected. As the load balancer terminates TLS and forwards requests to the instances over HTTP, most of the snippets you may find for doing this with Nginx would create a redirect loop. So you also have to look at the headers that the AWS load balancers add to the request. Nginx should not redirect if the X-Forwarded-Proto header is already set to https:

    location / {
        if ($http_x_forwarded_proto != 'https') {
            rewrite ^ https://$host$request_uri? permanent;
        }
    }
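To make the logic of that rule explicit, here is the same decision written as a small Python function. It is purely illustrative: the function name is made up, and the headers are assumed to be a plain dictionary keyed by the exact header name.

```python
def https_redirect_target(headers, host, request_uri):
    """Return the URL to redirect to, or None when no redirect is needed.

    The instance always receives plain HTTP from the load balancer, so the
    only way to know what the user typed is the X-Forwarded-Proto header.
    """
    if headers.get("X-Forwarded-Proto") == "https":
        # The user already came in over HTTPS; redirecting would loop forever
        return None
    return "https://{}{}".format(host, request_uri)


print(https_redirect_target({"X-Forwarded-Proto": "http"}, "sub2.domain.com", "/login?next=1"))
print(https_redirect_target({"X-Forwarded-Proto": "https"}, "sub2.domain.com", "/login?next=1"))
```

A rule that redirects every request arriving over HTTP at the instance, without looking at the header, would hit the first branch never and the second always, which is exactly the redirect loop described above.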

Finally, our applications use a custom Laravel signing algorithm for security. Among many other things, this algorithm uses the request URL on both the source and the destination machine. I had to make small modifications so that it takes the X-Forwarded-Proto header into account when signing. It worked like a charm!

And that's it! For the moment this is how I tackled our certificate problems, and hopefully it will let us forget about most of the certificate management work now that it is automated.

This is the solution I implemented. I can't guarantee it is optimal, but it covers all our requirements, and if you find yourself in a similar situation I hope you find it useful. Finally, if you see a flaw or an overcomplication in this solution I would love to know so I can improve it; comments and opinions are really appreciated.
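Our real algorithm is written in PHP and does much more, so the following Python sketch only illustrates the idea behind that change. HMAC-SHA256 is a stand-in for the actual signature, and every name in it is hypothetical: the point is that the destination machine must rebuild the URL with the scheme the client used, not the plain HTTP scheme it sees behind the balancer.

```python
import hashlib
import hmac


def effective_url(scheme, host, path, headers):
    """Rebuild the URL as the client saw it, honouring X-Forwarded-Proto."""
    forwarded = headers.get("X-Forwarded-Proto")
    if forwarded in ("http", "https"):
        scheme = forwarded
    return "{}://{}{}".format(scheme, host, path)


def sign_request(secret, scheme, host, path, headers):
    """Sign the effective URL (stand-in for the real signing algorithm)."""
    url = effective_url(scheme, host, path, headers)
    return hmac.new(secret, url.encode("utf-8"), hashlib.sha256).hexdigest()


secret = b"example-secret"  # placeholder value
# What the source machine signs: the public HTTPS URL
sent = sign_request(secret, "https", "app.domain.com", "/api/items", {})
# What the destination machine sees: plain HTTP plus the forwarded header
received = sign_request(secret, "http", "app.domain.com", "/api/items",
                        {"X-Forwarded-Proto": "https"})
print(hmac.compare_digest(sent, received))
```

Without the header lookup the destination would sign an http:// URL and the two signatures would never match.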